
The stress command

- A tool that applies a specified amount of load to specified subsystems (CPU, I/O, memory, disk)

Installing the stress package

$ yum install -y stress

$ stress --version

stress 1.0.4

stress command options

stress --cpu 8 --io 4 --vm 2 --vm-bytes 128M --timeout 10s

$ stress --help

`stress' imposes certain types of compute stress on your system

Usage: stress [OPTION [ARG]] ...

-?, --help show this help statement

--version show version statement

-v, --verbose be verbose

-q, --quiet be quiet

-n, --dry-run show what would have been done

-t, --timeout N timeout after N seconds

--backoff N wait factor of N microseconds before work starts

-c, --cpu N spawn N workers spinning on sqrt()

-i, --io N spawn N workers spinning on sync()

-m, --vm N spawn N workers spinning on malloc()/free()

--vm-bytes B malloc B bytes per vm worker (default is 256MB)

--vm-stride B touch a byte every B bytes (default is 4096)

--vm-hang N sleep N secs before free (default none, 0 is inf)

--vm-keep redirty memory instead of freeing and reallocating

-d, --hdd N spawn N workers spinning on write()/unlink()

--hdd-bytes B write B bytes per hdd worker (default is 1GB)

Example: stress --cpu 8 --io 4 --vm 2 --vm-bytes 128M --timeout 10s

Note: Numbers may be suffixed with s,m,h,d,y (time) or B,K,M,G (size).

CPU load test

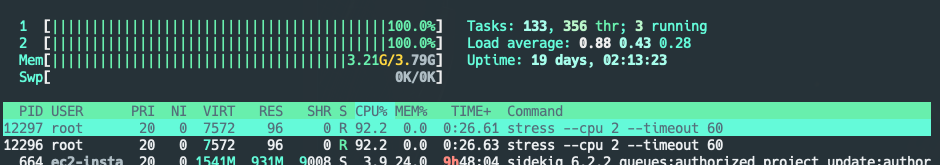

$ stress --cpu 2 --timeout 60

stress: info: [12295] dispatching hogs: 2 cpu, 0 io, 0 vm, 0 hdd

...

stress: info: [12295] successful run completed in 60s
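The run above hard-codes two CPU workers. As a minimal sketch, the worker count could instead be derived from the number of online CPUs. `cpu_workers` is a hypothetical helper name, and the sketch assumes `nproc` (GNU coreutils) is available, falling back to `getconf` otherwise.

```shell
# Hypothetical helper: print the number of online CPUs.
cpu_workers() {
  if command -v nproc >/dev/null 2>&1; then
    nproc
  else
    getconf _NPROCESSORS_ONLN
  fi
}

# Usage (not executed here): one sqrt() worker per CPU for 60 seconds.
#   stress --cpu "$(cpu_workers)" --timeout 60
```

Matching the worker count to the CPU count should saturate every core; a smaller count leaves headroom for the monitoring commands.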

$ ps -C stress -o command,pid,ppid,%cpu,psr,wchan

COMMAND PID PPID %CPU PSR WCHAN

stress 18648 28913 0.0 1 -

stress 18649 18648 94.0 0 -

stress 18650 18648 94.1 1 -

$ ltrace -ttT -p 18650

21:47:14.609844 rand() = 1780437465 <0.000132>

21:47:14.610098 rand() = 1569459610 <0.000130>

21:47:14.610348 rand() = 1517477923 <0.000130>

21:47:14.610600 rand() = 1915553563 <0.000130>

21:47:14.610852 rand() = 777724621 <0.000138>

21:47:14.611143 --- SIGALRM (Alarm clock) ---

21:47:14.611246 +++ killed by SIGALRM +++
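In the ps listing of the CPU test, the parent process sits at 0.0 %CPU while each of the two workers runs at about 94%. The following sketch totals the worker load from that kind of listing. `sum_worker_cpu` is a hypothetical helper, and it assumes the column order used above, where %CPU is the third field from the end.

```shell
# Hypothetical helper: read `ps -o command,pid,ppid,%cpu,psr,wchan` output on
# stdin and report how many stress processes are busy and their combined %CPU.
# %CPU is read as the third field from the end, so a COMMAND column that
# contains spaces does not shift it. The header row (NR == 1) is skipped.
sum_worker_cpu() {
  awk 'NR > 1 && $(NF-2) + 0 > 0 { n++; total += $(NF-2) }
       END { printf "%d workers, %.1f%% CPU total\n", n, total }'
}

# Usage (not executed here):
#   ps -C stress -o command,pid,ppid,%cpu,psr,wchan | sum_worker_cpu
```

Fed the three rows shown in the CPU test, this reports 2 workers and 188.1% CPU in total.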

Memory load test

$ stress --vm 2 --vm-bytes 128M --timeout 60s

stress: info: [13036] dispatching hogs: 0 cpu, 0 io, 2 vm, 0 hdd

...

stress: info: [13036] successful run completed in 60s

$ ps -C stress -o command,pid,ppid,rss

COMMAND PID PPID RSS

stress --vm 2 --vm-bytes 25 19957 28913 80

stress --vm 2 --vm-bytes 25 19958 19957 203244

stress --vm 2 --vm-bytes 25 19959 19957 26368

$ ltrace -ttT -p 19959

21:52:15.241869 malloc(268435456) = 0x7fe17e4c6010 <0.000296>

21:52:15.645915 free(0x7fe17e4c6010) = <void> <0.059945>

21:52:15.710023 malloc(268435456) = 0x7fe17e4c6010 <0.000391>

21:52:15.968379 free(0x7fe17e4c6010) = <void> <0.047908>

21:52:16.016446 malloc(268435456) = 0x7fe17e4c6010 <0.000208>

21:52:16.144638 --- SIGALRM (Alarm clock) ---

21:52:16.157518 +++ killed by SIGALRM +++
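The traced worker allocates 268435456 bytes per malloc() call, which is 256 MiB rather than the 128M requested in the stress command shown for this test. The ps listing is truncated at `--vm-bytes 25`, so the ps and ltrace captures appear to come from a separate run with a 256M setting. The sketch below expands a size suffix into bytes to make that comparison explicit. `to_bytes` is a hypothetical helper, and the 1024-based multipliers are an assumption that matches the malloc() size in the trace.

```shell
# Hypothetical helper: expand a size with an optional B/K/M/G suffix into
# bytes, assuming 1024-based multipliers.
to_bytes() {
  num=${1%[BbKkMmGg]}        # strip one trailing suffix letter, if present
  case $1 in
    *[Kk]) echo $((num * 1024)) ;;
    *[Mm]) echo $((num * 1024 * 1024)) ;;
    *[Gg]) echo $((num * 1024 * 1024 * 1024)) ;;
    *)     echo $((num)) ;;
  esac
}

to_bytes 128M   # prints 134217728, the size requested by --vm-bytes 128M
to_bytes 256M   # prints 268435456, the size seen in the malloc() calls above
```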

$ vmstat 3

r b swpd free buff cache si so bi bo in cs us sy id wa st

0 0 0 374996 0 244360 0 0 9 16 11 4 2 0 96 0 1

0 0 0 369780 0 244368 0 0 0 0 477 849 7 1 92 0 1

0 0 0 370188 0 244448 0 0 0 0 824 1493 8 1 88 0 4

2 0 0 259080 0 244472 0 0 0 0 610 952 8 19 69 0 4

2 0 0 360232 0 244500 0 0 0 8 692 711 24 76 0 0 0

2 0 0 268796 0 244864 0 0 116 0 985 1300 27 73 0 0 0

3 0 0 294896 0 244956 0 0 0 136 727 803 24 76 0 0 0

2 0 0 270288 0 244952 0 0 0 4 892 1846 29 71 0 0 0

2 0 0 205408 0 245096 0 0 41 0 783 982 26 74 0 0 0

2 0 0 205368 0 231604 0 0 64 127 772 1039 28 72 0 0 0

2 0 0 128268 0 233584 0 0 1056 422 1538 2935 33 67 0 0 0

3 0 0 246564 0 233736 0 0 0 0 775 814 24 76 0 0 0

5 0 0 286316 0 233728 0 0 0 0 1019 1984 33 67 0 0 0

6 0 0 226428 0 233756 0 0 1 0 811 993 28 72 0 0 0

2 0 0 233912 0 233784 0 0 0 0 741 750 25 75 0 0 0

2 0 0 245772 0 233800 0 0 0 287 879 1195 26 74 0 0 0

3 0 0 283944 0 234088 0 0 80 38 781 912 26 74 0 0 0

2 0 0 265888 0 234080 0 0 0 0 767 1624 31 69 0 0 0

2 0 0 362268 0 234104 0 0 0 0 791 913 23 77 0 0 0

2 0 0 197968 0 234128 0 0 0 0 698 715 25 75 0 0 0

2 0 0 204236 0 234912 0 0 251 287 1053 1460 26 74 0 0 0

3 0 0 330772 0 235008 0 0 0 0 734 852 25 75 0 0 0

2 0 0 283364 0 235000 0 0 20 0 907 1945 36 65 0 0 0

0 0 0 388020 0 235520 0 0 137 0 736 1050 19 54 28 0 0

Disk load test

$ stress --hdd 3 --hdd-bytes 1024m --timeout 60s

stress: info: [15227] dispatching hogs: 0 cpu, 0 io, 0 vm, 3 hdd

...

stress: info: [15227] successful run completed in 60s

$ ps -C stress -o command,pid,ppid

COMMAND PID PPID

stress --hdd 3 --hdd-bytes 20439 28913

stress --hdd 3 --hdd-bytes 20440 20439

stress --hdd 3 --hdd-bytes 20441 20439

stress --hdd 3 --hdd-bytes 20442 20439

$ ltrace -ttT -p 20442

21:55:29.709558 write(3, "n{6\\Pavw[:mO4=ZvMD^bvU;e'Rrs^4LJ"..., 1048575) = 1048575 <1.082627>

21:55:30.792425 write(3, "n{6\\Pavw[:mO4=ZvMD^bvU;e'Rrs^4LJ"..., 1048575) = 1048575 <1.049655>

21:55:31.842371 write(3, "n{6\\Pavw[:mO4=ZvMD^bvU;e'Rrs^4LJ"..., 1048575) = 1048575 <1.051572>

21:55:32.894085 write(3, "n{6\\Pavw[:mO4=ZvMD^bvU;e'Rrs^4LJ"..., 1048575) = 1048575 <0.985147>

21:55:33.879338 write(3, "n{6\\Pavw[:mO4=ZvMD^bvU;e'Rrs^4LJ"..., 1048575) = 1048575 <1.178622>

21:55:35.058034 write(3, "n{6\\Pavw[:mO4=ZvMD^bvU;e'Rrs^4LJ"..., 1048575) = 1048575 <1.200725>

21:55:36.258835 write(3, "n{6\\Pavw[:mO4=ZvMD^bvU;e'Rrs^4LJ"..., 1048575) = 1048575 <1.052971>

21:55:37.311914 write(3, "n{6\\Pavw[:mO4=ZvMD^bvU;e'Rrs^4LJ"..., 1048575 <no return ...>

21:55:38.274647 --- SIGALRM (Alarm clock) ---

21:55:38.293048 +++ killed by SIGALRM +++

$ vmstat 3

procs -----------memory---------- ---swap-- -----io---- -system-- ------cpu-----

r b swpd free buff cache si so bi bo in cs us sy id wa st

0 0 0 375896 0 242044 0 0 9 16 11 4 2 0 96 0 1

1 0 0 378672 0 242048 0 0 0 0 447 821 6 1 92 0 1

0 0 0 378928 0 242068 0 0 5 284 675 1221 3 1 96 0 0

0 0 0 378540 0 242256 0 0 56 0 868 1613 6 1 90 0 2

0 4 0 112604 0 520804 0 0 88 100056 1180 1412 7 5 51 29 8

0 8 0 111740 0 524456 0 0 1366 125645 1399 1892 3 2 3 91 0

2 3 0 111452 0 527212 0 0 5846 121571 1439 2053 3 2 4 90 1

0 4 0 112792 0 527972 0 0 7755 122509 1966 3013 3 4 7 86 0

procs -----------memory---------- ---swap-- -----io---- -system-- ------cpu-----

r b swpd free buff cache si so bi bo in cs us sy id wa st

2 6 0 111972 0 527220 0 0 10786 115699 1816 2987 8 4 1 87 0

1 4 0 111384 0 514604 0 0 2480 127005 1318 1849 5 3 7 84 1

0 4 0 112620 0 516548 0 0 3893 124263 1406 2024 5 2 3 90 0

0 4 0 118080 0 517952 0 0 10951 115685 1654 2448 2 3 3 91 1

0 4 0 195412 0 443660 0 0 8517 120411 1737 2696 3 6 2 89 0

0 5 0 112300 0 530280 0 0 15232 113113 2826 5305 15 4 1 79 1

0 4 0 112428 0 526892 0 0 2473 125640 1273 1700 10 3 2 85 0

0 5 0 112312 0 525588 0 0 4328 123588 1493 2156 6 2 8 84 1

$ iostat -x -d 3

Linux 4.14.232-176.381.amzn2.x86_64 (vamocha-prod-gitlab-01) 11/18/2021 _x86_64_ (2 CPU)

Device: rrqm/s wrqm/s r/s w/s rkB/s wkB/s avgrq-sz avgqu-sz await r_await w_await svctm %util

nvme0n1 0.00 0.00 9.33 0.00 105.33 0.00 22.57 0.00 0.86 0.86 0.00 0.29 0.27

Device: rrqm/s wrqm/s r/s w/s rkB/s wkB/s avgrq-sz avgqu-sz await r_await w_await svctm %util

nvme0n1 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00

Device: rrqm/s wrqm/s r/s w/s rkB/s wkB/s avgrq-sz avgqu-sz await r_await w_await svctm %util

nvme0n1 0.00 2.67 17.33 582.33 504.00 139991.33 468.58 6.94 12.70 11.46 12.73 1.19 71.07

Device: rrqm/s wrqm/s r/s w/s rkB/s wkB/s avgrq-sz avgqu-sz await r_await w_await svctm %util

nvme0n1 0.00 0.00 19.67 501.33 618.67 127612.00 492.25 11.77 24.45 17.97 24.70 1.82 94.80

Device: rrqm/s wrqm/s r/s w/s rkB/s wkB/s avgrq-sz avgqu-sz await r_await w_await svctm %util

nvme0n1 0.00 0.00 103.67 486.67 4168.00 124010.67 434.26 11.64 21.40 7.95 24.26 1.65 97.47

Device: rrqm/s wrqm/s r/s w/s rkB/s wkB/s avgrq-sz avgqu-sz await r_await w_await svctm %util

nvme0n1 0.00 1.33 139.67 495.00 5139.00 123138.67 404.24 12.20 20.89 6.02 25.09 1.55 98.53

Device: rrqm/s wrqm/s r/s w/s rkB/s wkB/s avgrq-sz avgqu-sz await r_await w_await svctm %util

nvme0n1 0.00 1.00 278.33 461.33 12425.67 115744.67 346.56 7.03 10.78 6.09 13.61 1.27 93.60

Device: rrqm/s wrqm/s r/s w/s rkB/s wkB/s avgrq-sz avgqu-sz await r_await w_await svctm %util

nvme0n1 0.00 0.00 414.00 474.67 7652.00 120558.33 288.55 8.96 11.18 6.61 15.16 1.10 98.00

System call tracing (strace) and library function tracing (ltrace) commands

$ strace -ttT -f stress --hdd 3 --hdd-bytes 1024m --timeout 60s

$ ltrace -ttT -p 20442
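With `-T`, ltrace appends the time spent in each call as `<seconds>` at the end of the line, as in the traces shown in the earlier sections. The sketch below averages that field for one function. `avg_call_time` is a hypothetical helper, and it assumes the `-ttT` line layout seen above, with the timestamp in the first field and the call in the second.

```shell
# Hypothetical helper: average the <seconds> field that `ltrace -T` appends to
# each completed call of the named function. Reads trace lines on stdin.
# Unfinished calls ("<no return ...>") and signal lines are ignored because
# they do not end in a numeric <seconds> field.
avg_call_time() {
  awk -v fn="$1" '
    index($2, fn "(") == 1 && match($0, /<[0-9]+\.[0-9]+>$/) {
      total += substr($0, RSTART + 1, RLENGTH - 2); n++
    }
    END { if (n) printf "%s: %d calls, avg %.6f s\n", fn, n, total / n }'
}

# Usage (not executed here; ltrace writes its trace to stderr by default):
#   ltrace -ttT -p 20442 2>&1 | avg_call_time write
```

Attaching ltrace slows the traced process considerably, so these per-call times describe the traced worker rather than its normal throughput.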
